The Situationists' Walkman - An Audio Augmented Reality Experience

For the CIF2021 award, we focussed our research & development work on two eXtended Reality (XR) experiences, with particular emphasis on 3D or spatial sound. Building on previous projects and research undertaken with colleagues at BBC R&D, Tim Cowlishaw and I planned a prototype to investigate headtracked 3D audio as a foundation for overlaying exciting sonic artworks, stories and experiences that either transport us away from, or augment, our real-world environment.

Goals

We set out to further validate the hypothesis that Audio Augmented Reality can create a convincing and compelling immersive experience. Unlike computer graphics running on most consumer hardware, recorded audio already has enough fidelity that our ears are convinced of the reality of what we hear. The missing ingredient is sound that emanates from a fixed point in space and is rendered spatially: in short, audio that sounds as natural as possible and closely approximates what we hear IRL.

There are plenty of headlocked “spatial” audio experiences, but it was important for us to investigate audio that is headtracked (it stays fixed in the world rather than moving with your head), has a sense of externalisation (it is perceived as coming from a point outside your head, in relation to you) and presence (the feeling of really being there).
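To make the headtracked/headlocked distinction concrete, here is a minimal Python sketch. It is not PHASE or ARKit code, just simplified 2D geometry under our own assumptions: positions are in metres with x pointing east and y pointing north, and head yaw is measured in degrees clockwise from north. All function names are hypothetical.

```python
import math

# Assumed conventions (hypothetical, for illustration only):
#   positions are (x, y) in metres, x = east, y = north
#   angles are degrees clockwise from north; azimuth 0 = straight ahead, +90 = to the right


def wrap_degrees(angle: float) -> float:
    """Wrap an angle in degrees into the range (-180, 180]."""
    wrapped = math.fmod(angle + 180.0, 360.0)
    if wrapped <= 0.0:
        wrapped += 360.0
    return wrapped - 180.0


def bearing_to(listener_xy, source_xy) -> float:
    """Compass bearing from the listener to a source, in degrees clockwise from north."""
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    return math.degrees(math.atan2(dx, dy))


def headtracked_azimuth(listener_xy, head_yaw_deg, source_xy) -> float:
    """World-fixed source: turning your head changes where the source appears."""
    return wrap_degrees(bearing_to(listener_xy, source_xy) - head_yaw_deg)


def headlocked_azimuth(fixed_azimuth_deg, head_yaw_deg) -> float:
    """Headlocked source: head yaw is ignored, so the sound turns with you."""
    return wrap_degrees(fixed_azimuth_deg)


source = (0.0, 10.0)  # a virtual speaker 10 m north of the listener
for yaw in (0.0, 90.0, -90.0):
    print(yaw, headtracked_azimuth((0.0, 0.0), yaw, source), headlocked_azimuth(30.0, yaw))
```

Facing north, the headtracked speaker is dead ahead (0); facing east, it sits to your left (-90); facing west, to your right (+90). The headlocked source stays at 30 degrees whichever way you turn, which is exactly why it never feels anchored to the street.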

Conceptually, we were interested in creating a playful, non-directed experience that offered surprising, uncanny sonic augmentation of the world around you. We wanted to use the city as a playground: a canvas onto which we could project directed audio stimuli, unconstrained by the physicality and hardware of a gallery setting, augmenting familiar surroundings with another layer of perceptual information. Think of it as a 3D graphical score with a participant conductor.

One particular reference we kept returning to was the Situationist idea of the dérive (“a mode of experimental behaviour linked to the conditions of urban society: a technique of rapid passage through varied ambiances”), an undirected, spontaneous exploration of the urban environment. This seemed particularly germane during the months we were working on this project. Facebook had just announced its “metaverse” strategy and rebrand, and much of the commentary around the announcement (as well as other artistic references that had inspired us) was concerned with the possibility that AR technologies might lead to further enclosure and commercialisation of public spaces. In this context, a Situationist-like intervention felt even more pertinent: we could use the project both to explore the playful, open-ended possibilities of the technology for artistic expression and to celebrate the importance of open public spaces by expanding them into AR-space.

The result was The Situationists’ Walkman: a digital dérive playing out within a small area of East London around Arnold Circus. It updates the Situationists’ vision, using audio-led exploration of the urban environment to prompt us to reconsider our relationship to this constructed or delineated space.

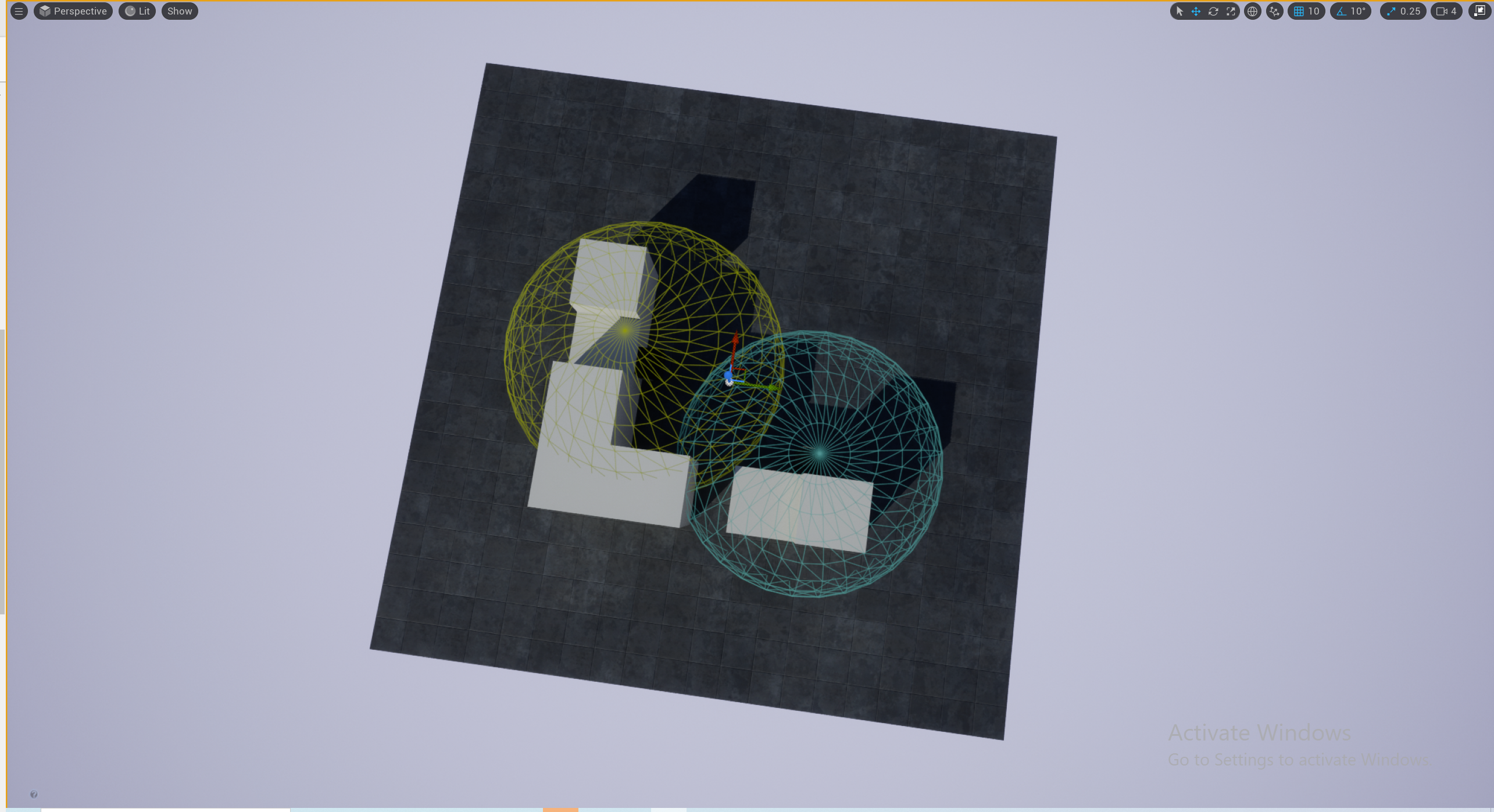

We commissioned a number of artists to make sonic artworks hosted inside overlapping zones around Arnold Circus and the Boundary Estate. Each artist provided a number of individual tracks or stems, as well as directions on how to “attach” them to the topography of their zone.
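One way to model overlapping zones, sketched in Python under our own assumptions: each zone is a circle with a hypothetical centre and radius, and a latitude/longitude fix for the listener (for example from GPS) is tested against every zone using the haversine great-circle distance, so several artworks can be active at once. The zone names and coordinates below are placeholders, not the real artist zones, and this is not necessarily how the app decides which zone you are in.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class Zone:
    name: str
    lat: float       # centre latitude in degrees (hypothetical)
    lon: float       # centre longitude in degrees (hypothetical)
    radius_m: float  # zone radius in metres (hypothetical)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points on a spherical Earth."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def active_zones(zones, lat, lon):
    """Return the name of every zone containing the listener; overlaps yield several names."""
    return [z.name for z in zones if haversine_m(z.lat, z.lon, lat, lon) <= z.radius_m]


# Placeholder zones about 44 m apart, each 60 m in radius, so they overlap.
zones = [
    Zone("zone_a", 51.5000, -0.0700, 60.0),
    Zone("zone_b", 51.5004, -0.0700, 60.0),
]
print(active_zones(zones, 51.5002, -0.0700))  # -> ['zone_a', 'zone_b']
```

Returning a list rather than a single zone is the design choice that matters here: when zones overlap, the listener hears the stems from every zone they are standing in.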

Strategy

Apple’s announcement of the PHASE audio engine at WWDC21 really piqued our interest. Together with the head tracking available via their AirPods headphones, it pointed to tools worth investigating for the project.

We evaluated numerous software environments (honourable shout-out to Roundware) and frameworks en route to the prototype, and it became clear that we had a number of challenges to solve:

- Listener location: accurately positioning the listener in world space

- Listener orientation: the direction the listener’s head and ears are facing

- Virtual speaker positioning

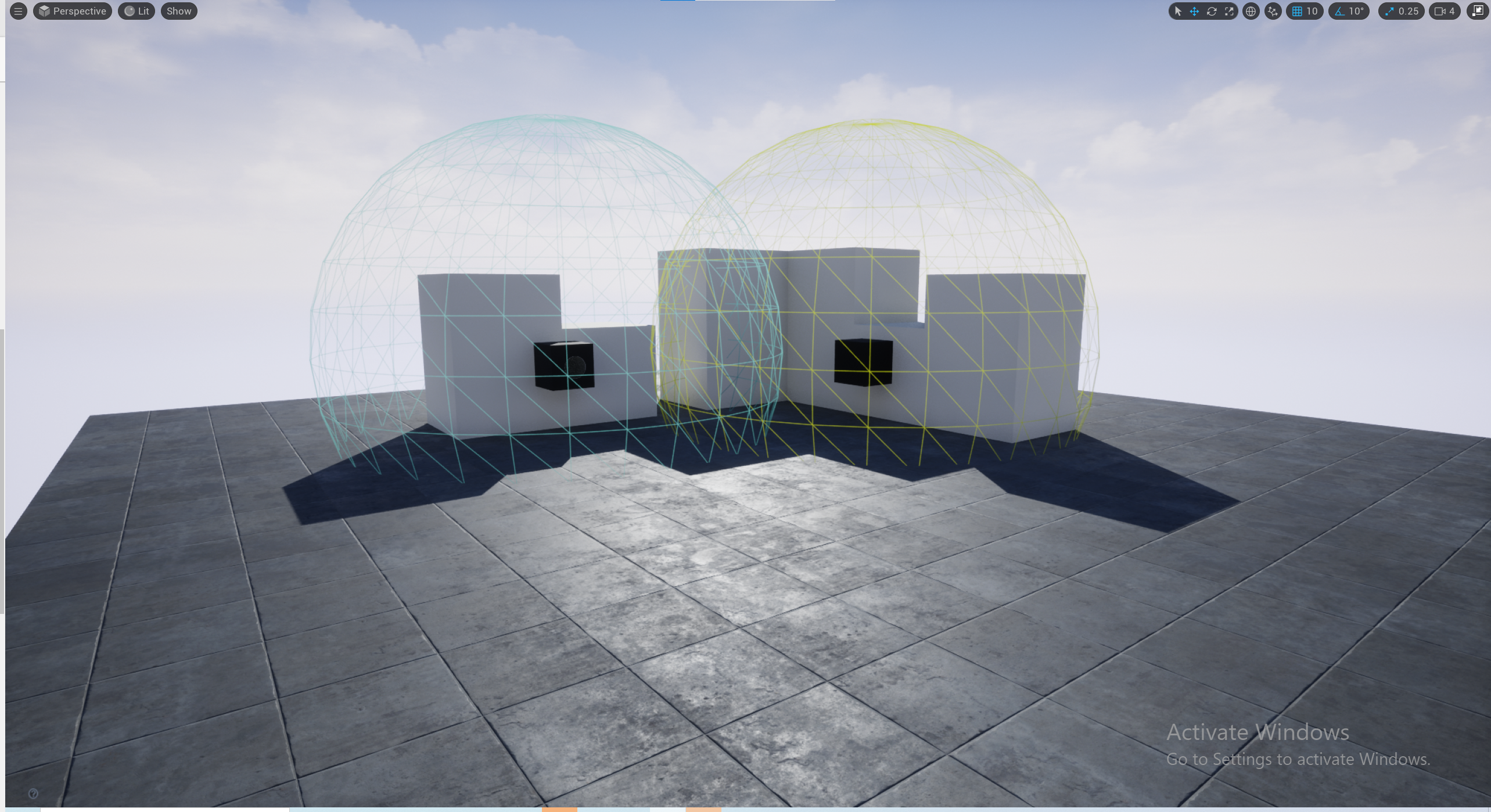

- Sound emission shape, direction and attenuation

- Sound occlusion

- 3D or spatial audio
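The emission shape, direction and attenuation challenge can be sketched with a simple model similar in spirit to the inverse-distance rolloff and sound cones of the Web Audio API’s PannerNode. This is not how PHASE models directivity internally; the functions and default parameter values are hypothetical, and positions use 2D metres with bearings in degrees clockwise from north.

```python
import math


def inverse_distance_gain(distance, ref_distance=1.0, rolloff=1.0):
    """Inverse-distance attenuation: unity gain up to ref_distance, then falling off."""
    d = max(distance, ref_distance)
    return ref_distance / (ref_distance + rolloff * (d - ref_distance))


def cone_gain(source_xy, source_facing_deg, listener_xy,
              inner_angle=60.0, outer_angle=180.0, outer_gain=0.2):
    """Directional emission: full gain inside the inner cone, outer_gain outside the
    outer cone, and a linear crossfade in between. Angles are total cone widths."""
    dx = listener_xy[0] - source_xy[0]
    dy = listener_xy[1] - source_xy[1]
    bearing = math.degrees(math.atan2(dx, dy))  # source -> listener, clockwise from north
    # Angle between where the source points and where the listener is, in [0, 180].
    off_axis = abs((bearing - source_facing_deg + 180.0) % 360.0 - 180.0)
    inner_half, outer_half = inner_angle / 2.0, outer_angle / 2.0
    if off_axis <= inner_half:
        return 1.0
    if off_axis >= outer_half:
        return outer_gain
    t = (off_axis - inner_half) / (outer_half - inner_half)
    return (1.0 - t) + outer_gain * t


def source_gain(source_xy, source_facing_deg, listener_xy, **cone):
    """Combined gain for a virtual speaker: distance attenuation times directivity."""
    distance = math.hypot(listener_xy[0] - source_xy[0], listener_xy[1] - source_xy[1])
    return inverse_distance_gain(distance) * cone_gain(
        source_xy, source_facing_deg, listener_xy, **cone)


# A speaker at the origin pointing north, heard from 4 m in front and 4 m behind.
print(source_gain((0.0, 0.0), 0.0, (0.0, 4.0)))
print(source_gain((0.0, 0.0), 0.0, (0.0, -4.0)))
```

Separating distance and direction keeps each factor easy to tune on site: the rolloff controls how far a sound carries, while the cone controls whether you hear it from behind.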

Modern game engines such as Unity and Unreal offer tools for designing audio experiences in a constructed virtual environment and provide all of the properties listed above, assuming you design a sound stage and navigate around it in a fully immersive Virtual Reality environment via a hardware headset. We needed a similar experience, but overlaid IRL. One of our guiding principles is that the technology should disappear into the background so the participant can become fully immersed without being distracted by the medium. Before kicking off, it seemed like a good time to refamiliarise ourselves with these tips for creating audio AR experiences from Henry Cooke and our BBC R&D friends, to avoid any pitfalls they had uncovered.

You can take a deeper dive into the technology we used and how we arrived at the final experience over at this post.

Results

After a lot of finessing on site, we had an experience we were really happy with! Wandering around and discovering augmented sounds emanating from various points around the site is very effective.

Here’s a DIY video of part of the experience. It can’t really capture what it feels like to explore the site, but it will give you a sense of it. Make sure you wear headphones, otherwise the spatial effect will be lost.

During testing, we noticed a really uncanny perceptual side effect. After exposure to augmented audio, particularly material that clearly wasn’t coming from the local environment (which wasn’t always obvious), your ear tuned back into the natural sounds in a way that sharply refocused you on the location: almost like a heightened state of sensory perception!

Another interesting observation, and an area we’d like to explore further, is that we preferred the experience without the AirPods head-tracking data! This is counterintuitive, but with the device held against your chest, your head can only turn so far before you twist your body anyway, so the device’s own orientation already gives a reasonable approximation of where you are facing. There also seemed to be some lag between quick head movements and the reorientation of the soundstage.

We plan to run some tests with a small group of participants on site in March. After that, we’ll release the experience to a wider group via TestFlight before publishing it on the App Store. Drop us a line if you’d like to be part of the test groups and get early access! N.B.: you’ll need a LiDAR-capable Apple device running iOS 15 or later.

Thanks and credits

Concept, Design and Development by: Matt Spendlove & Tim Cowlishaw

Featured artists:

- Elvin Brandhi

- Tim Cowlishaw & Constanza Piffardi

- Sally Golding

- Mark Harwood

- Nick Luscombe

- The Nonument Group

- Ruaridh Law

- Spatial & Oliver Coates

View a map of the participating artist zones here.

Produced by Cenatus with the generous support of the Innovate UK Creative Industries Fund.

Thanks to Dave Johnston, Irini Papadimitriou and Henry Cooke for mentoring and advice.

If you’re interested in our work, you can follow along here, or get in touch if you need some help designing spatial audio experiences!

published on 09 Feb 2022

This project is gratefully supported by Innovate UK, the UK’s innovation agency.