Building an FM Synth with MetaSounds

My initial exploration into DSP and 3D audio with game engines mostly focussed on Unity, but I noted at the time that Epic’s Unreal Engine was actually way ahead in terms of native DSP and procedural audio. Fast forward to mid-2021 and Epic announced MetaSounds, the latest iteration and culmination of a lot of hard work modernising the audio in their engine! They describe it as follows:

MetaSounds are fundamentally a Digital Signal Processing (DSP) rendering graph. They provide audio designers the ability to construct arbitrarily-complex procedural audio systems that offer sample-accurate timing and control at the audio-buffer level.

This is a huge leap forward. MetaSounds provide a graphical patch editor for wiring together low-level audio components that generate and process sound. These patches can then expose parameters to the engine runtime, enabling tight integration between gameplay (or experience play) and sound. The UI is similar in concept to Pure Data or Max/MSP and clearly targets sound designers and composers. Colour me very interested!

I really wanted to explore the possibilities for use in immersive environments, so as part of our CIF2021 R&D work I proposed a prototype to research the use of procedural audio in creating an audiovisual artwork that can adapt and transform in real time within a Virtual Reality “activated environment”, based upon user exploration and interaction.

Creative possibilities

The potential of this style of integrated software is HUGE and affords the kind of control we can only dream about when creating physical gallery installations or expanded cinema work. My experience building audiovisual performances taught me that, fundamentally, we’re working on a perceptual level using psychophysical principles. Our brains are fantastic pattern-matching systems, so providing the right cues and mappings can lead to convincing, integrated multisensory experiences.

A simple, concrete example would be using synthesiser envelope data to shape audio and visual stimuli in unison. In audio synthesis, an envelope describes how some value (amplitude, for instance) changes over time. Mapping that same value to a visual property, e.g. the opacity of a primitive shape, can really bring the visual to life, make the animation dance and solidify the connection in our brains.
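To make the idea concrete, here is a minimal sketch in Python (not MetaSounds or Unreal code) of a linear ADSR envelope whose single value drives both an audio gain and a shape's opacity. The function names, the default attack/decay/sustain/release times and the `min_opacity` floor are illustrative assumptions.

```python
def _held_level(t, attack, decay, sustain):
    """Envelope level while the gate is held: attack ramp, decay ramp, then sustain."""
    if t < attack:
        return t / attack
    if t < attack + decay:
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    return sustain


def adsr(t, attack=0.01, decay=0.2, sustain=0.6, release=0.5, gate_time=1.0):
    """Linear ADSR level in [0, 1] at time t (seconds) for a note held for gate_time seconds."""
    if t < 0.0:
        return 0.0
    if t < gate_time:
        return _held_level(t, attack, decay, sustain)
    # Release: ramp linearly from the level reached at gate-off down to zero.
    start = _held_level(gate_time, attack, decay, sustain)
    remaining = 1.0 - (t - gate_time) / release
    return max(0.0, start * remaining)


def map_envelope(env, min_opacity=0.1):
    """Map one envelope value to an audio gain and a shape opacity (both in 0..1)."""
    gain = env
    # Keep the shape faintly visible even when the sound is silent.
    opacity = min_opacity + (1.0 - min_opacity) * env
    return gain, opacity


# Sample the envelope at a few points in time, as a per-frame update might.
for t in (0.0, 0.005, 0.5, 1.25, 2.0):
    gain, opacity = map_envelope(adsr(t))
    print(f"t={t:.3f}s gain={gain:.2f} opacity={opacity:.2f}")
```

Because gain and opacity are derived from the same number, they rise, hold and fade together, which is exactly the audiovisual coupling described above.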

Technology overview

As you can see in the header image, a MetaSound patch is a graph of nodes and connections that represents an audio signal path. The editor lets you insert and connect these nodes to build out the patch.

At the time of writing, MetaSounds are only available as a plugin in the Unreal Engine 5 Early Access program and are under active development. Our research took place on the EA2 branch, but I know from following the #metasounds channel on Discord that the API has changed a bit since EA2.

It’s crucial to grasp that the source nodes in your MetaSounds graph can be traditional, static audio wave files for sample playback and processing but, far more interestingly, they can also be waveform generators like the classic Saw, Sine, Square and Triangle shapes. Combining audio sources with filters, envelope generators, LFOs, mixers and maths functions provides the building blocks to create everything from familiar subtractive synths to elaborate, custom hybrid synth patches, each with a set of exposed interface parameters that can be controlled by anything else in the game engine.
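As a rough illustration of those building blocks outside the engine, the Python sketch below implements the four classic waveforms as functions of phase, a phase-accumulating oscillator, a two-input mixer and a one-pole low-pass filter. These are naive, non-band-limited textbook versions chosen for readability; all names are hypothetical and this is not how MetaSounds implements its nodes.

```python
import math


# Classic waveforms as functions of phase, measured in cycles in [0, 1).
def sine(phase):
    return math.sin(2.0 * math.pi * phase)


def saw(phase):
    return 2.0 * phase - 1.0  # rises from -1 to +1 over one cycle


def square(phase):
    return 1.0 if phase < 0.5 else -1.0


def triangle(phase):
    return 1.0 - 4.0 * abs(phase - 0.5)  # -1 at the cycle edges, +1 in the middle


def render(wave, freq, sample_rate=48000, num_samples=8):
    """Oscillator: advance a phase accumulator by freq / sample_rate each sample."""
    phase, out = 0.0, []
    for _ in range(num_samples):
        out.append(wave(phase))
        phase = (phase + freq / sample_rate) % 1.0
    return out


def mix(a, b, gain_a=0.5, gain_b=0.5):
    """Two-input mixer: weighted sum of two equal-length signals."""
    return [gain_a * x + gain_b * y for x, y in zip(a, b)]


def one_pole_lowpass(signal, cutoff, sample_rate=48000):
    """Simple one-pole low-pass filter: each output leans towards the new input."""
    a = math.exp(-2.0 * math.pi * cutoff / sample_rate)
    y, out = 0.0, []
    for x in signal:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out


# A tiny "patch": saw + square, mixed, then darkened by the filter.
bright = mix(render(saw, 220.0, num_samples=480), render(square, 330.0, num_samples=480))
darker = one_pole_lowpass(bright, 800.0)
```

Chaining small functions like this mirrors wiring nodes in the patch editor: each stage only needs the output of the previous one.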

Alongside these prebuilt nodes exposed via the patching UI, you can also create your own nodes by writing some C++ and including them in a source build of the engine.

FM Synth

The classic audio generators handily include a frequency modulation input for FM synthesis techniques so I decided to build an FM synth patch as the basis for my interactive experiments.

In FM synthesis, we use a “modulator” signal to modulate the pitch (frequency) of a “carrier” signal, with both typically in the audio range. This modulation creates new frequency content in the resulting sound: extra partials, called sidebands, appear either side of the carrier frequency at multiples of the modulator frequency, changing the timbre or colour of the sound.
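The sketch below shows the idea in plain Python: a sine modulator's output, in Hz, is added to the carrier's frequency at every sample, much like feeding a modulator into an oscillator's frequency modulation input. `fm_tone` and `amplitude_at` are hypothetical helpers, and the single-bin DFT is only there to check where the energy lands.

```python
import math


def fm_tone(carrier_hz, modulator_hz, depth_hz, sample_rate=48000, num_samples=48000):
    """Sine carrier whose instantaneous frequency is carrier_hz + depth_hz * sin(modulator)."""
    car_phase = mod_phase = 0.0  # phases in cycles
    out = []
    for _ in range(num_samples):
        mod = depth_hz * math.sin(2.0 * math.pi * mod_phase)  # modulator output in Hz
        out.append(math.sin(2.0 * math.pi * car_phase))
        # Frequency modulation: the modulator shifts how fast the carrier phase advances.
        car_phase = (car_phase + (carrier_hz + mod) / sample_rate) % 1.0
        mod_phase = (mod_phase + modulator_hz / sample_rate) % 1.0
    return out


def amplitude_at(signal, freq_hz, sample_rate=48000):
    """Single-bin DFT: amplitude of the sinusoidal component at freq_hz."""
    w = 2.0 * math.pi * freq_hz / sample_rate
    re = sum(x * math.cos(w * i) for i, x in enumerate(signal))
    im = sum(x * math.sin(w * i) for i, x in enumerate(signal))
    return 2.0 * math.hypot(re, im) / len(signal)


# 1000 Hz carrier, 200 Hz modulator, 200 Hz peak deviation (modulation index 1).
tone = fm_tone(1000.0, 200.0, 200.0)
for f in (800.0, 1000.0, 1100.0, 1200.0):
    print(f"{f:.0f} Hz: {amplitude_at(tone, f):.3f}")
```

With these settings, energy shows up at 800 Hz and 1200 Hz (first-order sidebands, roughly 0.44 each for an index of 1) while 1100 Hz, which is not a whole multiple of the modulator away from the carrier, stays essentially empty.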

In recent years, I’ve spent time learning synthesis techniques in greater detail via hardware modular systems and software like SuperCollider. The latter is an incredible open-source, real-time, interactive synth engine and an essential learning resource for DSP. I revisited Eli Fieldsteel’s excellent course materials, focussing on the FM lessons (parts 1 and 2), and worked on porting them across into a MetaSounds patch.

I won’t repeat the instructions here, but if you follow along with his videos the patches should be simple enough to translate. I’d encourage you to do so, particularly if you want to get a sense of the wide range of sounds possible from even the most basic FM synth, and to see a demonstration of how powerful randomising the control values can be for sound design. This is the crux of why procedural audio in game engines is so appealing for developers.
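Here is a small Python sketch of that randomising idea: each call draws a fresh set of FM control values, using a log-uniform (exponential) distribution for frequency and modulator ratio so that values are spread evenly per octave rather than bunched at the high end, similar in spirit to SuperCollider's `exprand`. The parameter names and ranges are hypothetical choices for illustration, not values taken from Eli's course.

```python
import math
import random


def exp_rand(lo, hi, rng):
    """Log-uniform value in [lo, hi]: each octave is equally likely."""
    return math.exp(rng.uniform(math.log(lo), math.log(hi)))


def random_patch(rng):
    """Draw one random set of (hypothetical) FM synth control values."""
    return {
        "freq": exp_rand(40.0, 800.0, rng),      # base pitch in Hz
        "mod_ratio": exp_rand(0.125, 8.0, rng),  # modulator frequency / base frequency
        "car_ratio": rng.choice([0.5, 1.0, 2.0]),
        "index": rng.uniform(0.5, 10.0),         # modulation index (brightness)
    }


rng = random.Random(2022)  # seeded, so a happy accident can be reproduced later
for _ in range(3):
    print(random_patch(rng))
```

Seeding the generator is a small but useful design choice: a patch you stumble upon while auditioning random values can be recalled exactly.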

FM Basic

In the short video below, we can see the MetaSounds implementation of Eli’s basic FM synth from SuperCollider:

FM Ratio Index

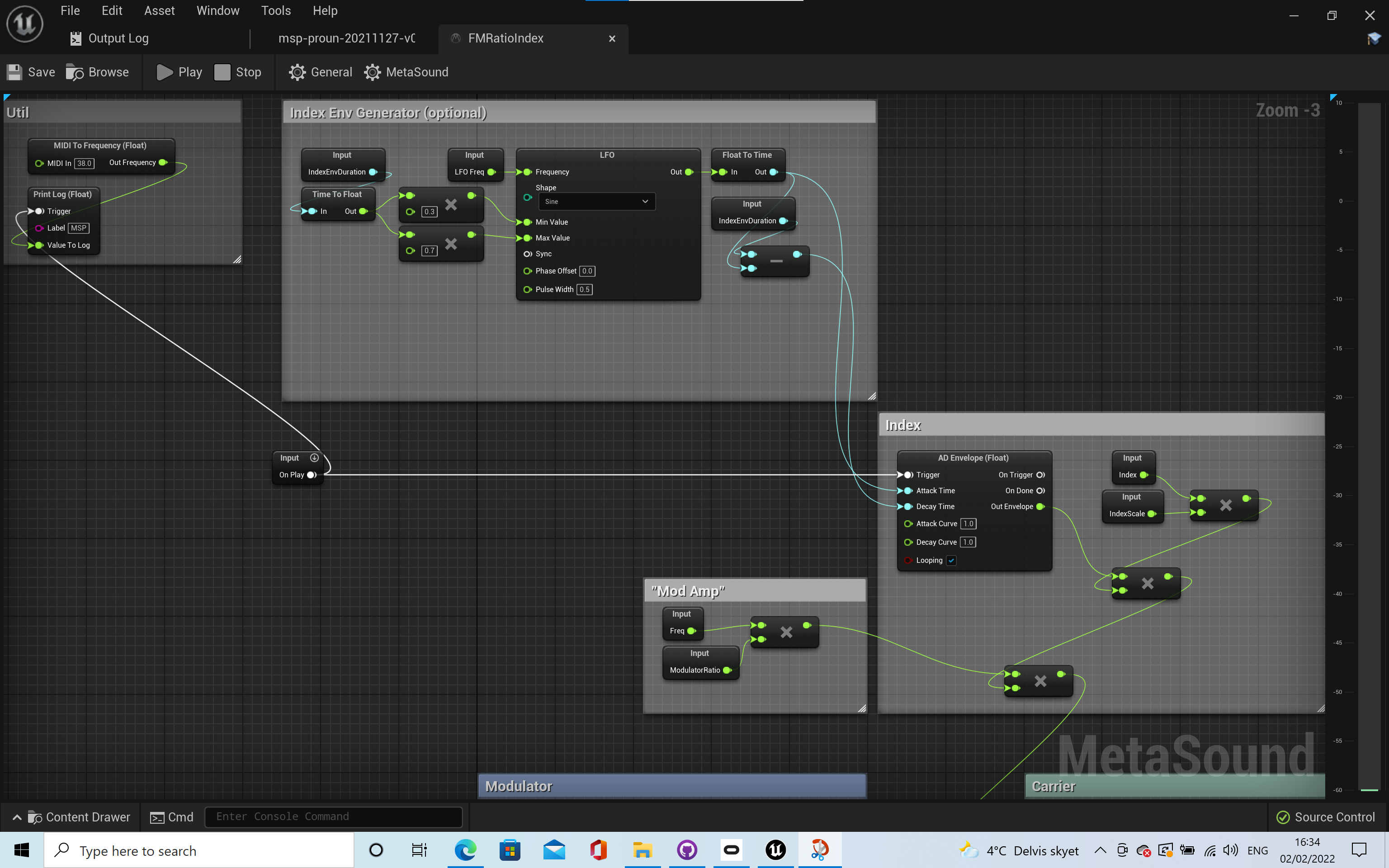

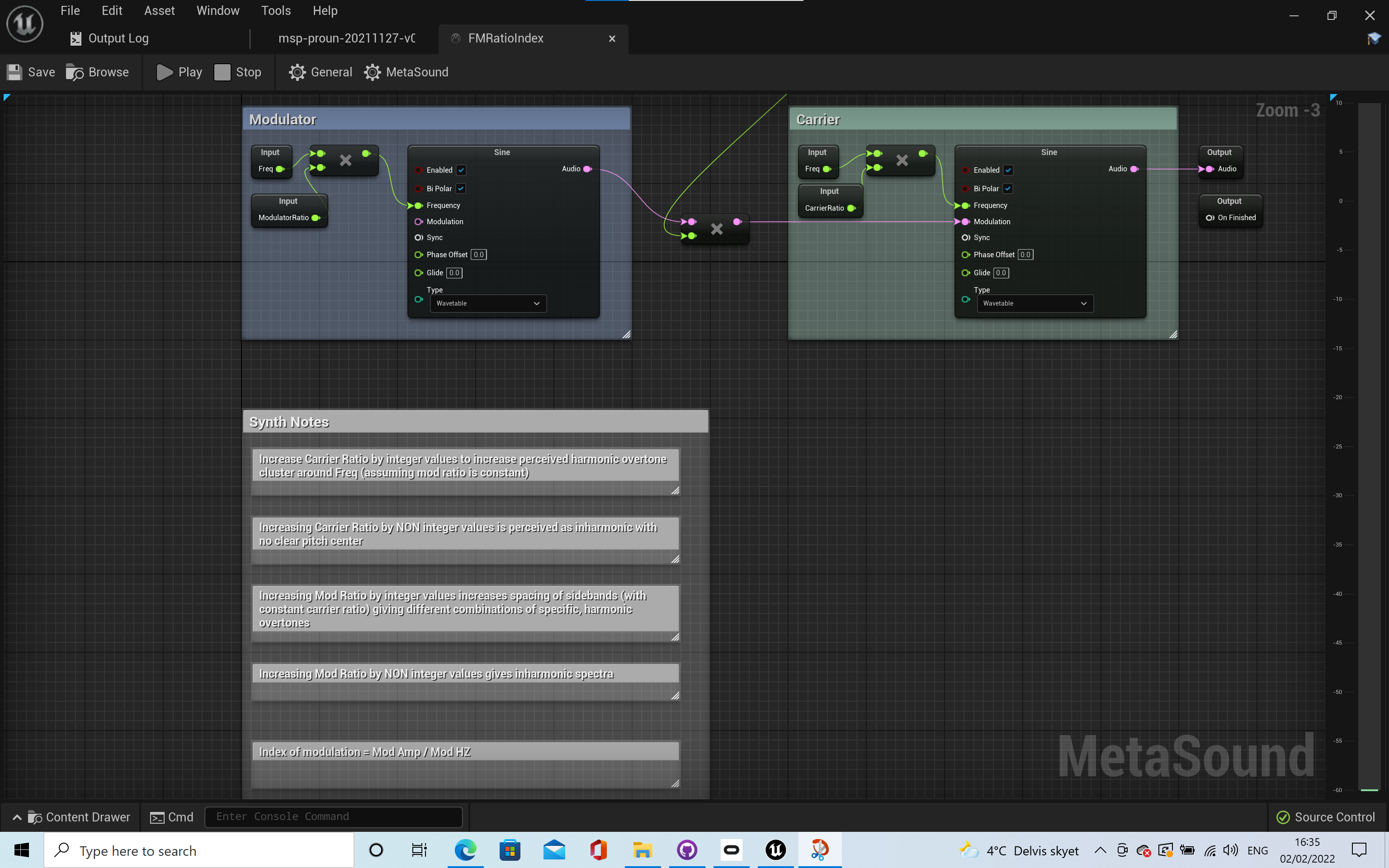

Following on from that, a slightly more advanced example from the second lesson. We use this as the basis for further experiments. You can use following screenshots to recreate the patch:

The playlist below demonstrates the effect of changing the modulator and carrier ratios and other inputs:

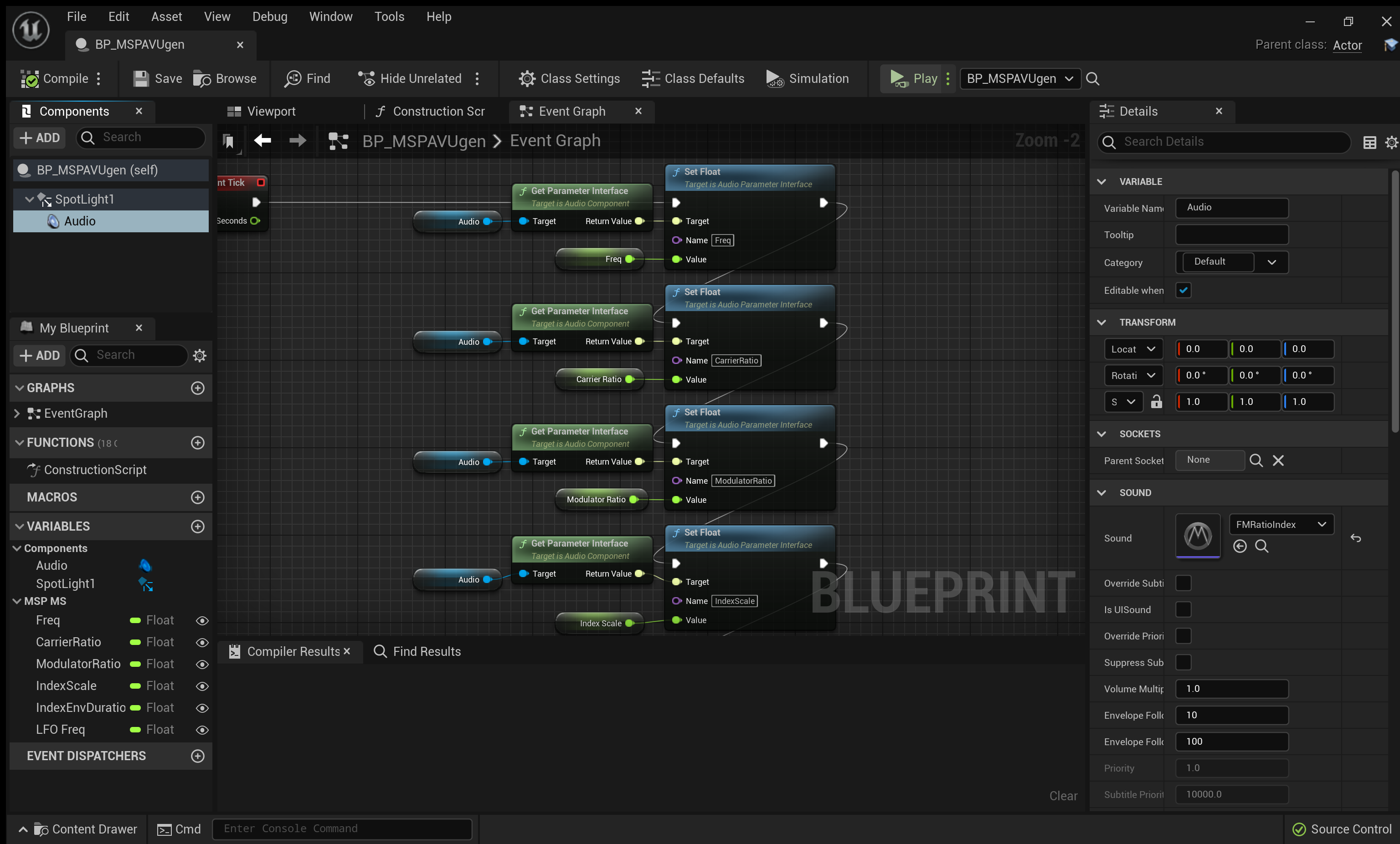
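The sketch below spells out a ratio/index parametrization of the kind taught in FM tutorials (our reading of the approach, not a transcription of the lesson): both oscillator frequencies are derived from a base frequency via ratios, and the modulator's depth in Hz is its own frequency multiplied by the index, so the timbre stays consistent as the base pitch changes. The `FMParams` class and its field names are hypothetical; `sidebands` simply lists where partials can appear (carrier plus or minus k times the modulator, with negative frequencies folding back).

```python
from dataclasses import dataclass


@dataclass
class FMParams:
    freq: float            # base frequency in Hz
    car_ratio: float = 1.0
    mod_ratio: float = 1.0
    index: float = 1.0     # modulation index

    @property
    def carrier_hz(self):
        return self.freq * self.car_ratio

    @property
    def modulator_hz(self):
        return self.freq * self.mod_ratio

    @property
    def depth_hz(self):
        # Peak deviation scales with the modulator frequency,
        # so depth_hz / modulator_hz always equals the index.
        return self.modulator_hz * self.index

    def sidebands(self, k_max=3):
        """Sorted frequencies (Hz) of carrier +/- k * modulator for k = 0..k_max."""
        freqs = set()
        for k in range(k_max + 1):
            freqs.add(abs(self.carrier_hz + k * self.modulator_hz))
            freqs.add(abs(self.carrier_hz - k * self.modulator_hz))  # negatives fold back
        return sorted(freqs)


p = FMParams(freq=100.0, car_ratio=1.0, mod_ratio=2.0, index=3.0)
print(p.carrier_hz, p.modulator_hz, p.depth_hz)
print(p.sidebands(3))
```

With a 1:2 carrier-to-modulator ratio every partial lands on an odd multiple of the base frequency, which is why that setting has a hollow, square-wave-like character. `sidebands` only says where partials can appear; how strong each one is depends on the index.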

Blueprints

All the examples so far show patching and playback directly within the MetaSounds editor. To actually publish the MetaSound inputs to other components in UE5 for runtime control, you currently need to wrap the MetaSound in a Blueprint. You can see an example below, where we wrap the MetaSound and expose its inputs as Blueprint variables:

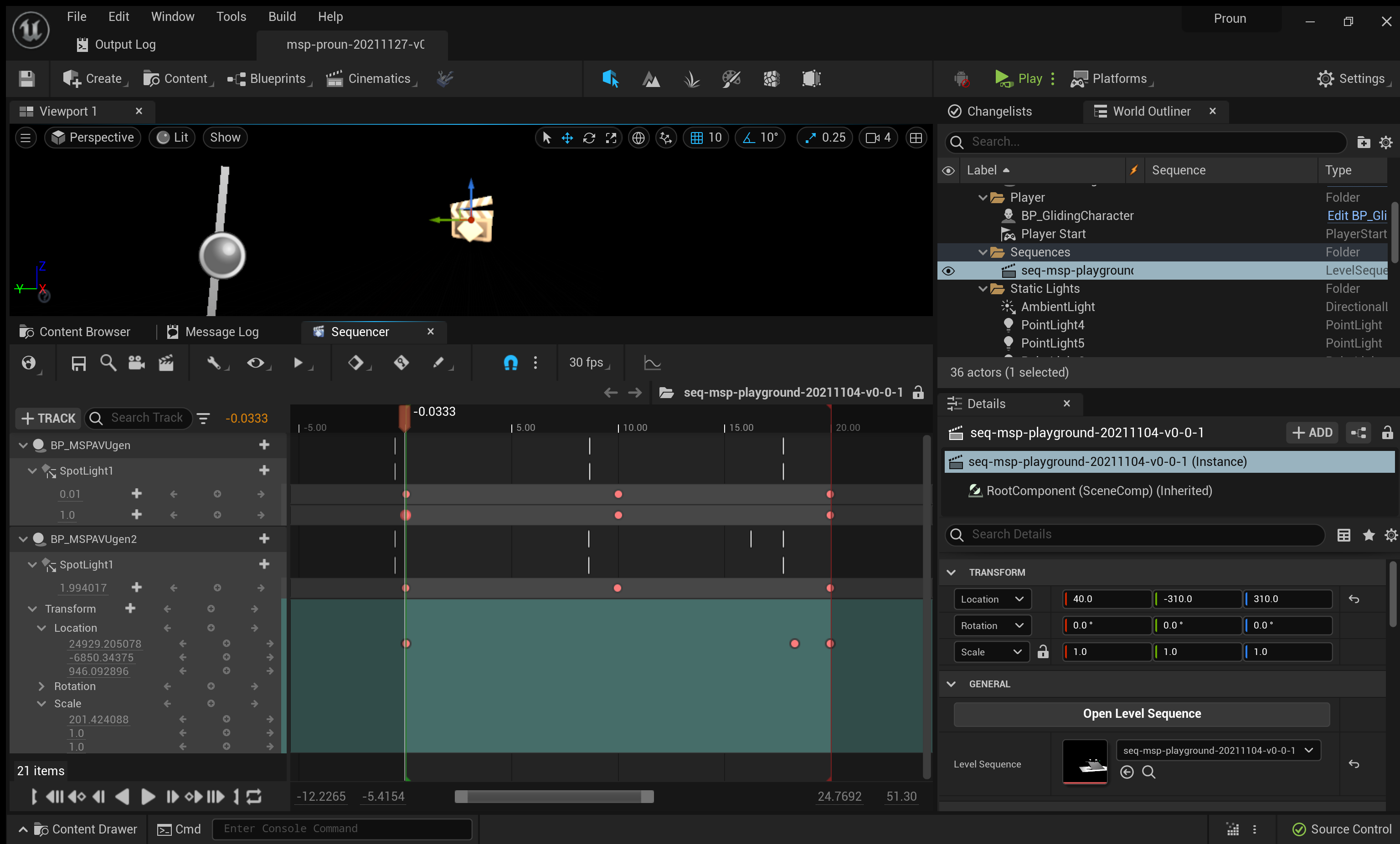

The next screenshot shows an example of using the UE5 Sequencer tool to modulate some of those parameters over time. You could, of course, use any aspect of your game to manipulate the synthesiser:
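Conceptually, a Sequencer track is a curve of keyframes evaluated over time. The Python sketch below mimics that with piecewise-linear interpolation and adds a clamped range mapping for driving a parameter from an arbitrary game value such as player distance. Both helpers are hypothetical and deliberately simplified: Unreal's Sequencer also supports other interpolation modes, and in the engine the resulting value would be sent to the exposed MetaSound input rather than returned.

```python
from bisect import bisect_right


def evaluate_curve(keys, t):
    """keys: (time_seconds, value) pairs sorted by time. Linear interpolation, clamped at the ends."""
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)  # first key strictly after t
    (t0, v0), (t1, v1) = keys[i - 1], keys[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def map_range(x, in_lo, in_hi, out_lo, out_hi):
    """Clamp x to [in_lo, in_hi], then rescale it linearly to [out_lo, out_hi]."""
    x = min(max(x, in_lo), in_hi)
    return out_lo + (out_hi - out_lo) * (x - in_lo) / (in_hi - in_lo)


# Sequencer-style: sweep a hypothetical "index" input up and back down over four seconds.
index_keys = [(0.0, 0.5), (2.0, 8.0), (4.0, 1.0)]
for t in (0.0, 1.0, 3.0, 5.0):
    print(t, evaluate_curve(index_keys, t))

# Gameplay-style: the closer the player (in metres), the brighter the tone.
print(map_range(12.0, 0.0, 20.0, 10.0, 0.5))
```

Either source produces the same kind of output, a single number per update, which is what makes it so easy to swap a timeline for gameplay data.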

Summary

We’ve seen how to build synth patches in MetaSounds, porting an FM synth from SuperCollider and learning the basics of FM synthesis along the way. Stay tuned for further posts where we’ll show some of the work we built using these techniques!

published on 05 Feb 2022

This project is gratefully supported by Innovate UK, the UK’s innovation agency.