Sprint 3 - Applied Learning

Creative Coding in Unity

I started Sprint 3 keen to build something, so I revisited the creative coding tutorial in Unity that David Johnston recommended at the beginning of the project. What initially struck me about this tutorial is how it strips back the Unity ceremony and “complexity”. That complexity is actually aimed at simplifying the process for game devs, but with so many options available it can be daunting for a new user. The focus here is on pixels and a canvas, taking you a little closer to the metal, which aligns with my previous experience of openFrameworks and Processing.

What follows are the notes I made for each episode whilst reviewing the tutorial. They are mostly for my own reference but could be helpful if you need to dissect the basics of creating dynamic animations in Unity. I’ve included a direct video link and GitHub commit for each section, where relevant.

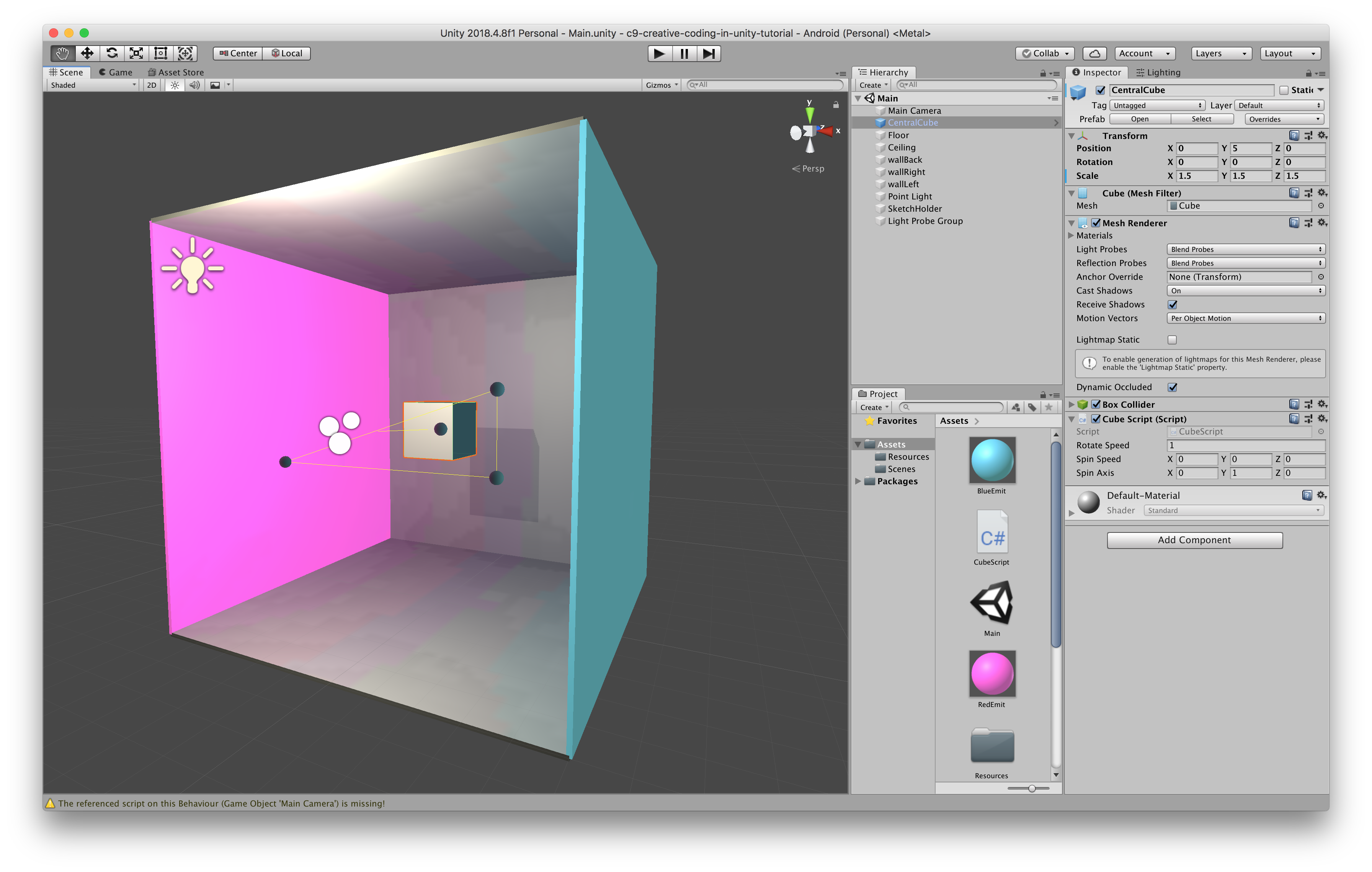

Episode 1 helps to orientate you inside the virtual space, explaining your development perspective, the camera perspective and the default skybox. It guides you through the creation of a Cornell Box, and helps explain the default lighting within a scene and how you can take control of it.

Episode 2 brings the focus inside the Cornell Box, setting up our own lighting and dealing with optimisations like light pre-rendering. It also introduces the concept of reusable materials that can be applied to any object in your scene - in this case, specifically materials that emit light, which are really powerful.

*Mostly Unity metadata so not much to read; subsequent commits are more useful.

Episode 3 deals with attaching scripts to game objects and the basics of manipulating object properties via transforms over time to create animation. Just as fundamental is the decoupling of scripts and objects: a script does nothing until you attach it to an object. Another core concept here is exposing public attributes within a script, which makes them available in the Unity editor and to other code for development and runtime interaction.
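The idea of changing a property a little on every frame can be sketched outside Unity. What follows is a minimal, engine-agnostic illustration in Python, not Unity's API: the `SpinningCube` class, its `degrees_per_second` attribute and the `simulate` helper are all hypothetical names. It assumes the per-frame step is scaled by the time elapsed since the last frame, so the motion runs at the same speed whatever the frame rate.

```python
class SpinningCube:
    """Hypothetical stand-in for a script attached to an object."""

    def __init__(self, degrees_per_second=90.0):
        # Public attribute: the kind of value an editor could expose for tweaking.
        self.degrees_per_second = degrees_per_second
        self.angle = 0.0  # current rotation in degrees

    def update(self, delta_time):
        """Advance the rotation by one frame of length delta_time (seconds)."""
        self.angle = (self.angle + self.degrees_per_second * delta_time) % 360.0
        return self.angle


def simulate(cube, fps, seconds):
    """Call update once per frame for the given duration; return the final angle."""
    delta_time = 1.0 / fps
    for _ in range(int(round(fps * seconds))):
        cube.update(delta_time)
    return cube.angle
```

Running `simulate(SpinningCube(90.0), 30, 1.0)` and `simulate(SpinningCube(90.0), 60, 1.0)` should land on roughly the same angle, which is the point of scaling by the frame time.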

Episode 4 continues the familiarisation with animating an object in space. The important concept introduced is the creation of a prefab - essentially a prototype game object and script that can be reused from other scripts. In this instance, we create a “spinning cube” prefab to allow us to add many cubes to the scene from one reusable definition.

Episode 5 is about the creation and management of game objects programmatically. Now that we have a reusable spinning cube component or prefab, we need some code to manage the instances. That’s where the game controller or sketch object (in line with Processing nomenclature) comes in - this is the entry point to our scene.

Episode 6 is more of a general creative coding / animation tutorial covering trigonometric functions and easing techniques. It isn’t specifically related to Unity, but it is really valuable background.
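To give a flavour of the trigonometry and easing involved, here is a small sketch in Python. It is my own illustration under stated assumptions rather than code from the tutorial: the function names are hypothetical, and sine-based ease-in-out is just one common easing curve among many.

```python
import math


def ease_in_out_sine(t):
    """Map progress t in [0, 1] to an eased value in [0, 1], slow at both ends."""
    t = max(0.0, min(1.0, t))  # clamp so out-of-range input stays sensible
    return 0.5 * (1.0 - math.cos(math.pi * t))


def lerp(start, end, t):
    """Linear interpolation between start and end for t in [0, 1]."""
    return start + (end - start) * t


def oscillate(time_s, period_s=2.0, amplitude=1.0):
    """Sine wave that repeats every period_s seconds, ranging over +/- amplitude."""
    return amplitude * math.sin(2.0 * math.pi * time_s / period_s)
```

Feeding the eased value into the interpolation, as in `lerp(0.0, 10.0, ease_in_out_sine(progress))`, moves a value from 0 to 10 with a gentle start and stop, while `oscillate` gives the continuous back-and-forth motion that sine is typically used for.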

Episode 7 covers processor-efficient techniques for ensuring dynamic prefabs respond correctly to static lighting via Light Probes - a mechanism to pre-render light at specific node positions that objects can inherit.

Episode 8 is the final episode in the series and reviews the work so far before investigating post-processing camera effects such as Bloom and Depth of Field, using pre-existing packages for the first time. The tutorial is a little out of date regarding post-processing effects, so it’s worth reading the up-to-date guide over at the Unity docs.

The tutorial wraps up with a couple of resources that inspired the series: Catlike Coding by Jasper Flick and the Holistic Game Development book by Penny de Byl.

I really learned a lot from these and felt much more confident in Unity on completion. I hope you find the breakdown in my notes useful too. Many thanks to Rick Barraza for the series!

Prototyping Concepts

Energised by the tutorial, I started thinking about what I might like to make. An obvious follow-up would be to port a version of my Transduction project. The geometric animation would be great as a 360 degree XR experience and I now understand the basics of how to implement that.

The event pattern generation for the original project is created via Tidal Cycles and fed to SuperCollider (the audio engine) and Processing (the visual engine). It would be pretty trivial to keep a similar architecture and just swap Processing out for Unity, as the transport protocol is all OSC, but I’d like the application to be self-contained on the Oculus Quest.

I’d like to start with event pattern generation and a DSP audio engine inside Unity, which may be less dynamic (i.e. there’s no livecoding interface), but I’m sure I can still create something satisfying. The next challenge is to look into DSP and 3D sound options for Unity, so I’ll tackle that next time.

published on 29 Sep 2019

This project is gratefully supported by Arts Council England as part of their DYCP fund.